Get to know the client

First, some background on the client’s eCommerce website. The platform is powered by Optimizely CMS and Optimizely Commerce, and an in-place Application Lifecycle Management process keeps both on the latest versions. The catalogue items are indexed in the Optimizely Find search engine cloud service. The index is updated during the daily scheduled product import. The eCommerce application is hosted entirely in Azure as several WebApps: one WebApp for Optimizely Commerce (1 instance) and two WebApps for the Optimizely CMS (each with several instances on S2 Large). The Optimizely CMS has been set up for the seven countries the retailer is active in: the Netherlands, Belgium, France, Germany, Luxembourg, Austria and Poland.

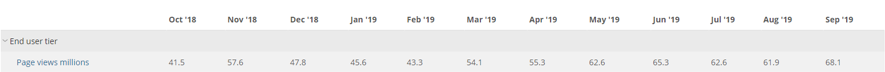

The number of website visitors is growing rapidly, reaching between 60 and 70 million pageviews per month. The most visited country site is that of the Netherlands, with around 11 million pageviews per month. Across all country sites, the number of pageviews averages 3.5 million per month. Traffic peaks when the weekly newsletter is sent out, with up to 60 percent more traffic than on a normal day.

Figure 1: Website pageviews per month


The initial set-up and challenge

Using scheduled jobs for the catalogue import process in Optimizely Commerce

The commerce entities, such as the product catalogue, prices and availability, were imported into the Optimizely Commerce application through an asynchronous import of separate XML files delivered by the client’s backoffice systems. The Optimizely Scheduled Jobs functionality was used to retrieve the XML files from the FTP server and import the items into the eCommerce catalogue. The jobs were loosely scheduled, with no direct relation between them. Because the product completeness rules were also implemented in the CMS application, the Optimizely catalogue could contain products that could not or should not be displayed on the webshop. The same was true of Optimizely Find, which also contained these unpublished products.

The stability of the platform is directly affected by the import process

The situation described in the previous paragraph shows that the import processes were an integral part of the content management and content delivery instances of Optimizely CMS. Any issue with these scheduled jobs would have a negative impact on the production environment of the eCommerce website, because the same instances were tasked with different responsibilities. Unfortunately, there were indeed some serious issues that affected the performance and uptime of the platform.

Optimizely Find in combination with a high traffic website

A standard implementation of the Optimizely Commerce platform uses Optimizely Find extensively. Any page that displays product information requires calls to the Optimizely Find service. During the import it became clear that updating a single product could also require up to 5 different calls to the Optimizely Find service. Disabling the indexing process during the import proved unsuccessful. The requests to Optimizely Find from website traffic, combined with the indexing requests for the imported products, caused the Optimizely Find service to return '429 too many requests' responses to the application. As a result, requests backed up in the application. Eventually, due to the high error count, Azure would restart the Optimizely instance automatically, thereby directly impacting the uptime of the platform.

Because the import could only be done in large batches, if the Optimizely instance was restarted by Azure, the import would need to be restarted in its entirety. Obviously, this could not be done at any random time because of the expected peak loads on the website.

The import through Optimizely jobs also provided limited monitoring data: it only reported the final output and sometimes did not even give an alert when a job failed to run or complete. A lot of time was spent determining whether products had been published, and troubleshooting issues was a very frustrating process.

The issues with Optimizely Find were somewhat mitigated by moving the index to a new cluster on Azure, raising the QPS (queries per second) limit, and by subsequent Optimizely Find updates. But the decision was eventually made to change the import architecture to bring improved performance, stability, dependability and predictability to the client’s online platform.

The solution

Defining the parameters to a successful import process

To arrive at an application architecture that would bring improved performance, stability and dependability to the client’s online platform, we first formulated the requirements the new process should meet. These were stated as follows:

- The import process should have minimal dependencies with, and impact on, the eCommerce website and CMS;

- It should be possible to run the import process multiple times a day, even on an hourly basis;

- The import process should be able to run during peak visitor load;

- The import process should be executed logically instead of scheduled within pre-defined intervals;

- The imports must be quicker than in the previous situation;

- The solution should be set up to accommodate single item updates in the future;

- The solution should add traceability to the imported items and the overall import process;

- The monitoring, alerting and reporting function should be improved.

The new import process architecture

As the first part of the solution, the import processes and the indexation process were isolated in a separate and dedicated Optimizely CMS instance. This instance would be solely responsible for the Optimizely scheduled jobs and would not be part of the Content Management and Content Delivery function. Any issues with these processes would no longer have an impact on the production environment, improving the uptime of the eCommerce website. Adding this dedicated layer is in line with good architectural design principles.

The dependency between the scheduled jobs was established by introducing a so-called ‘Master Import Job’ that centrally triggers the separate import jobs. The new job was also tasked with monitoring the individual jobs and, when a job failed, sending detailed alerts to a select list of recipients.
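The idea of one job that triggers the others in order, tracks their outcome and raises an alert on failure can be sketched as follows. This is a minimal illustration in Python, not the client's implementation (which runs as an Optimizely scheduled job). The class name `MasterImportJob`, the job names, the `send_alert` callback and the choice to stop at the first failure are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class JobResult:
    name: str
    succeeded: bool
    detail: str = ""


@dataclass
class MasterImportJob:
    # Ordered (name, callable) pairs; names and order are illustrative only.
    jobs: List[Tuple[str, Callable[[], None]]]
    # Hypothetical alert hook, e.g. something that mails a recipient list.
    send_alert: Callable[[str], None]
    results: List[JobResult] = field(default_factory=list)

    def run(self) -> bool:
        """Run each job in order; alert and stop at the first failure."""
        for name, job in self.jobs:
            try:
                job()
                self.results.append(JobResult(name, True))
            except Exception as exc:
                self.results.append(JobResult(name, False, str(exc)))
                self.send_alert(f"Import job '{name}' failed: {exc}")
                return False  # assumption: later jobs depend on this one
        return True


# Usage: the second job fails, so the third is never started.
def import_prices() -> None:
    raise RuntimeError("prices.xml missing")


alerts: List[str] = []
master = MasterImportJob(
    jobs=[
        ("catalogue", lambda: None),
        ("prices", import_prices),
        ("availability", lambda: None),
    ],
    send_alert=alerts.append,
)
ok = master.run()
```

Running the jobs from one place is what gives them a defined order and a single point for monitoring, in contrast to independently scheduled jobs.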

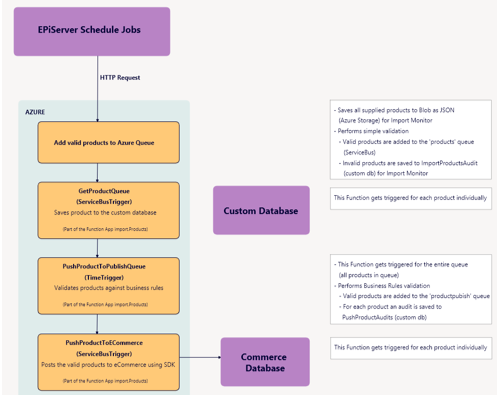

The import application logic was removed from the Optimizely Scheduled Jobs and moved into several Azure Functions, each with its own responsibility in the import process. Azure Functions is a serverless compute service hosted on the Microsoft Azure public cloud, designed to accelerate and simplify application development. An audit logging table was created for each function so that proper logging would be in place for each step in the import process.

Figure 2: New product import flow
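To make the per-function audit logging mentioned above more concrete, here is a small sketch using an in-memory SQLite database as a stand-in for the real audit store. The table layout, the column names and the function name `fetch_products` are hypothetical; the article does not describe the actual schema.

```python
import sqlite3
from datetime import datetime, timezone


def create_audit_table(conn: sqlite3.Connection, function_name: str) -> str:
    """Create one audit table per function (assumed naming: audit_<function>)."""
    # The table name comes from code, not user input, so formatting it is safe here.
    table = f"audit_{function_name}"
    conn.execute(
        f"CREATE TABLE IF NOT EXISTS {table} ("
        "logged_at TEXT NOT NULL, item_id TEXT NOT NULL, "
        "status TEXT NOT NULL, message TEXT)"
    )
    return table


def audit(conn: sqlite3.Connection, table: str, item_id: str,
          status: str, message: str = "") -> None:
    """Record the outcome of one import step for one item."""
    conn.execute(
        f"INSERT INTO {table} VALUES (?, ?, ?, ?)",
        (datetime.now(timezone.utc).isoformat(), item_id, status, message),
    )


# Usage: log two items handled by a hypothetical 'fetch_products' function.
conn = sqlite3.connect(":memory:")
table = create_audit_table(conn, "fetch_products")
audit(conn, table, "SKU-1", "ok")
audit(conn, table, "SKU-2", "failed", "missing price element")
rows = conn.execute(
    f"SELECT item_id, status FROM {table} ORDER BY rowid"
).fetchall()
```

With one row per item per step, the question "was this product published, and if not, where did it stop?" becomes a query rather than an investigation.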


The Scheduled Jobs in Optimizely make HTTP requests to the Azure Function that is responsible for retrieving the products from the FTP server and storing them in an Azure queue. A second Function App retrieves the items from the queue and stores them in a temporary table. A third Function App retrieves from that table the products that are publishable according to the completeness rules and adds them to the Optimizely catalogue. It uses the Optimizely SDK for this, as the Content API is not yet usable for Optimizely Commerce objects.
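The three stages described above can be sketched with in-memory stand-ins: a `queue.Queue` for the Azure queue, a dictionary for the temporary table and another dictionary for the catalogue. The XML layout, the field names and the completeness rule (a product needs a name and a price) are assumptions made purely for illustration; the real functions talk to FTP, Azure storage and the Optimizely SDK.

```python
import queue
import xml.etree.ElementTree as ET

# Hypothetical sample of a delivered product file.
SAMPLE_XML = """<products>
  <product sku="A1"><name>Lamp</name><price>19.95</price></product>
  <product sku="B2"><name>Chair</name></product>
</products>"""


def fetch_to_queue(xml_text: str, q: queue.Queue) -> None:
    """Stage 1: parse the delivered XML and enqueue one message per product."""
    for node in ET.fromstring(xml_text).iter("product"):
        q.put({
            "sku": node.get("sku"),
            "name": node.findtext("name"),
            "price": node.findtext("price"),  # None when the element is absent
        })


def queue_to_staging(q: queue.Queue, staging: dict) -> None:
    """Stage 2: drain the queue into the staging table, keyed by SKU."""
    while not q.empty():
        item = q.get()
        staging[item["sku"]] = item


def is_publishable(item: dict) -> bool:
    """Assumed completeness rule: both a name and a price must be present."""
    return bool(item["name"]) and bool(item["price"])


def publish(staging: dict, catalogue: dict) -> None:
    """Stage 3: copy only publishable products into the catalogue."""
    for sku, item in staging.items():
        if is_publishable(item):
            catalogue[sku] = item


q: queue.Queue = queue.Queue()
staging: dict = {}
catalogue: dict = {}
fetch_to_queue(SAMPLE_XML, q)
queue_to_staging(q, staging)
publish(staging, catalogue)
```

In this sketch the incomplete product `B2` stays in the staging table and never reaches the catalogue, which mirrors the goal of keeping unpublishable products out of the catalogue and the search index.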

In this solution each product is imported individually, which makes it possible to re-import specific products if the import fails somewhere down the line. It is no longer necessary to redo the whole import process if one item fails. In our experience, Azure Functions proved more reliable and more efficient for this workload than the Scheduled Jobs offered by Optimizely. They are also easier to maintain because each function has a single responsibility.
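The benefit of per-item imports is that a failure is isolated to one product and only that product needs to be retried. A minimal sketch, with a hypothetical `import_one` callback standing in for the real catalogue write:

```python
from typing import Callable, Dict, List, Tuple


def import_items(items: List[str],
                 import_one: Callable[[str], None]) -> List[Tuple[str, str]]:
    """Import items one by one; return (item, error) pairs for the failures."""
    failed = []
    for item in items:
        try:
            import_one(item)
        except Exception as exc:
            # One bad item does not abort the rest of the run.
            failed.append((item, str(exc)))
    return failed


# Usage: 'B2' fails on its first attempt only (simulated transient error).
attempts: Dict[str, int] = {}
imported: List[str] = []


def import_one(sku: str) -> None:
    attempts[sku] = attempts.get(sku, 0) + 1
    if sku == "B2" and attempts[sku] == 1:
        raise RuntimeError("temporary failure")
    imported.append(sku)


failed = import_items(["A1", "B2", "C3"], import_one)
# Retry only the items that failed, not the whole batch.
retry_failed = import_items([sku for sku, _ in failed], import_one)
```

Compared with the earlier batch import, where a restart meant starting over, the retry here touches a single product.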

The advantages of this solution are:

- Separation of logical applications;

- Considerably less load on Optimizely CMS and eCommerce website;

- It makes optimal usage of Optimizely functionality;

- The import can be run independent of time and multiple times a day;

- The import is handled as a logical entity;

- It introduces robustness, flexibility and scalability to the architecture.

The conclusion

The main lesson from this process is that software systems should be fitted into the solution architecture based on their strengths, not on what they happen to offer or make possible as an extra. Optimizely CMS and Optimizely Commerce excel in personalized content delivery and eCommerce capabilities. It is therefore very important that the required background processes do not become detrimental to these capabilities.